Many applications today rely on concurrent programming techniques to improve performance. In a world where a single server can handle thousands of concurrent connections, understanding the nuances of concurrency and parallelism is crucial for iOS app performance optimization. Yet many developers use the two terms interchangeably, which can lead to inefficient code.


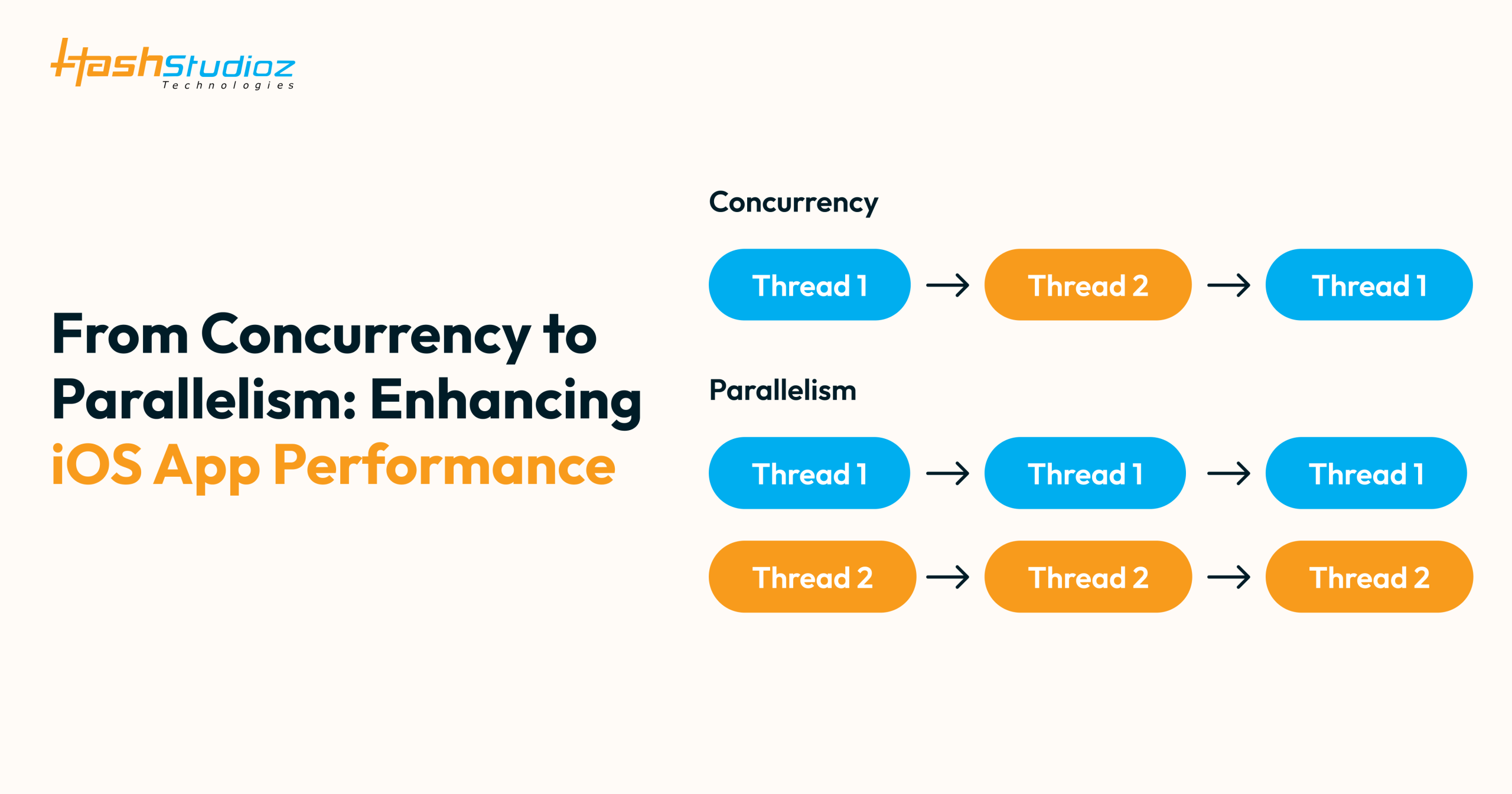

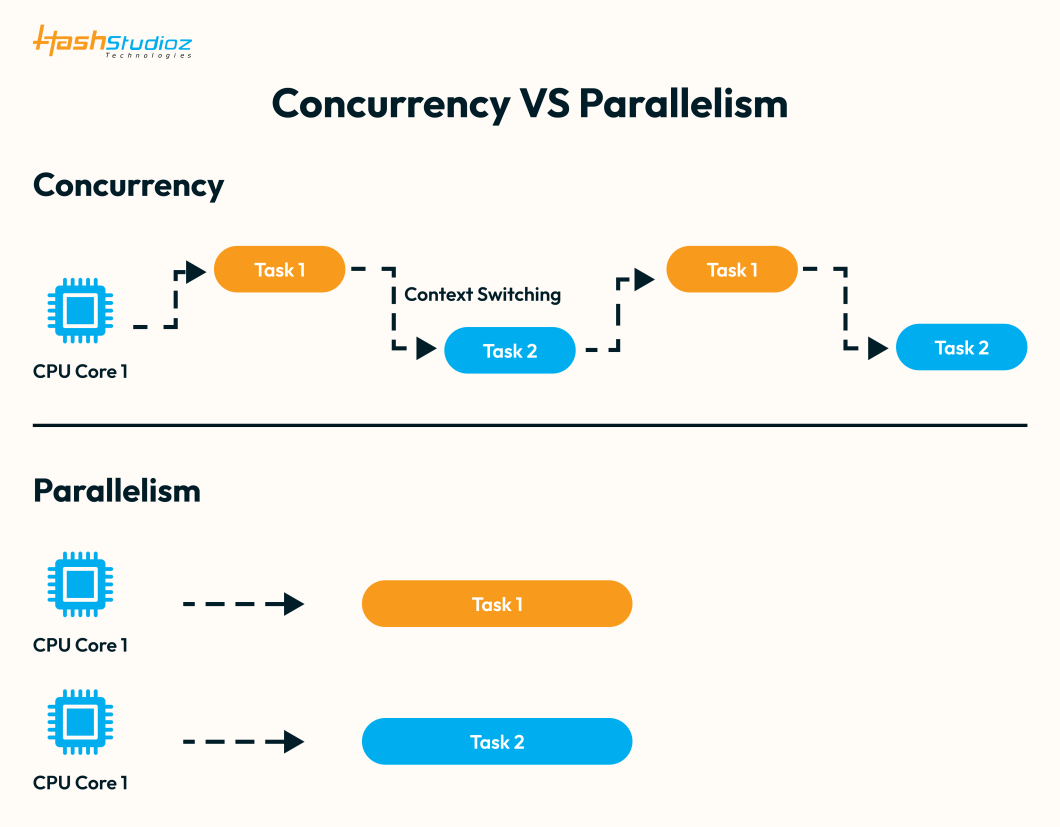

Concurrency

Concurrency is the execution of multiple instruction sequences during the same period of time. Work is carried out by executing instruction sequences, so whenever several of them are in progress at once, concurrency is happening.

For example, suppose we have two tasks, Task 1 and Task 2. If both are in progress at the same time, or at least appear to be, we say the tasks are executing concurrently; that phenomenon is concurrency.

Parallelism

Which brings us to parallelism. With concurrency, two tasks also appear to run at the same time, so what is the difference?

The dictionary defines parallelism as "the state of being parallel or of corresponding in some way." To understand this better, look at this super mom: it is not just appearing that she is doing six jobs at the same time; they really are happening at the same time.

The difference from concurrency is that a telephone operator may appear to respond to two calls at the same time, while in reality he answers one call at a time. In parallelism, multiple processing elements are used simultaneously to solve a problem. The problem is broken down into instructions, and those instructions are executed at the same time on more than one resource. In short, parallelism involves more resources.
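To make the distinction concrete in Swift, here is a minimal sketch of data parallelism using GCD's `DispatchQueue.concurrentPerform` (GCD itself is covered below). It splits one large sum into chunks that run concurrently and, on a multi-core device, in parallel; on a single core the same code would still be concurrent but not parallel. The chunk count of 8 is an arbitrary assumption.

```swift
import Foundation

// Sum 1...1_000_000 by splitting the work into chunks; concurrentPerform
// runs the chunks concurrently and, on a multi-core device, in parallel.
let numbers = Array(1...1_000_000)
let chunkCount = 8  // assumption: arbitrary number of chunks
let chunkSize = (numbers.count + chunkCount - 1) / chunkCount

// Swift arrays are not thread-safe, so writes to partialSums are guarded by a lock.
var partialSums = [Int](repeating: 0, count: chunkCount)
let lock = NSLock()

DispatchQueue.concurrentPerform(iterations: chunkCount) { chunk in
    let start = chunk * chunkSize
    let end = min(start + chunkSize, numbers.count)
    let chunkSum = numbers[start..<end].reduce(0, +)

    lock.lock()
    partialSums[chunk] = chunkSum
    lock.unlock()
}

let total = partialSums.reduce(0, +)
print(total) // 500000500000
```

`concurrentPerform` returns only after every iteration has finished, so `total` can be computed safely on the calling thread.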

Concurrency in iOS

- Achieving multithreading by creating threads manually

- Grand Central Dispatch

- Operation Queue

- Modern Concurrency in Swift

Manual Thread Creation

Creating threads manually is not the recommended approach, but it does have advantages. It is the rawest way to achieve multithreading, and it gives us the most control and customization: we can start a thread, cancel it, change its stack size, and so on. We work directly with the thread API, with no abstraction layer above it, so the entire control is in the developer's hands.
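The control described above can be sketched with `Thread`'s closure-based initializer. This is a minimal sketch under stated assumptions: the thread name and the 1 MiB stack size are arbitrary, and the loop body is simulated work. Note that `cancel()` is cooperative; it only sets `isCancelled`, so the thread's own code must check the flag and return.

```swift
import Foundation

let finished = DispatchSemaphore(value: 0)
var iterations = 0  // written only by the worker; read after `finished` signals

// Closure-based Thread initializer (iOS 10+ / macOS 10.12+).
let worker = Thread {
    // cancel() is cooperative: the thread must check isCancelled and return by itself.
    repeat {
        iterations += 1
        Thread.sleep(forTimeInterval: 0.01)  // simulated unit of work
    } while !Thread.current.isCancelled
    finished.signal()
}
worker.name = "com.example.worker"  // hypothetical name; shows up in the debugger
worker.stackSize = 1 << 20          // 1 MiB; must be set before start()
worker.start()

Thread.sleep(forTimeInterval: 0.1)
worker.cancel()   // only sets isCancelled on the worker
finished.wait()   // block until the worker has noticed and left its loop
print("Iterations: \(iterations), cancelled: \(worker.isCancelled)")
```

This bookkeeping (lifecycle, cancellation checks, synchronization) is exactly what the higher-level APIs below take off the developer's hands.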

Create Custom Thread

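```swift
import Foundation

// NSObject subclass so the @objc method can serve as the thread's selector target
class CustomThread: NSObject {

    func createThread() {
        // The thread calls threadSelector() once it has been started
        let thread = Thread(target: self, selector: #selector(threadSelector), object: nil)
        thread.start()
    }

    @objc func threadSelector() {
        print("Custom thread in action")
    }
}

let customThread = CustomThread()
customThread.createThread()
```

Grand Central Dispatch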

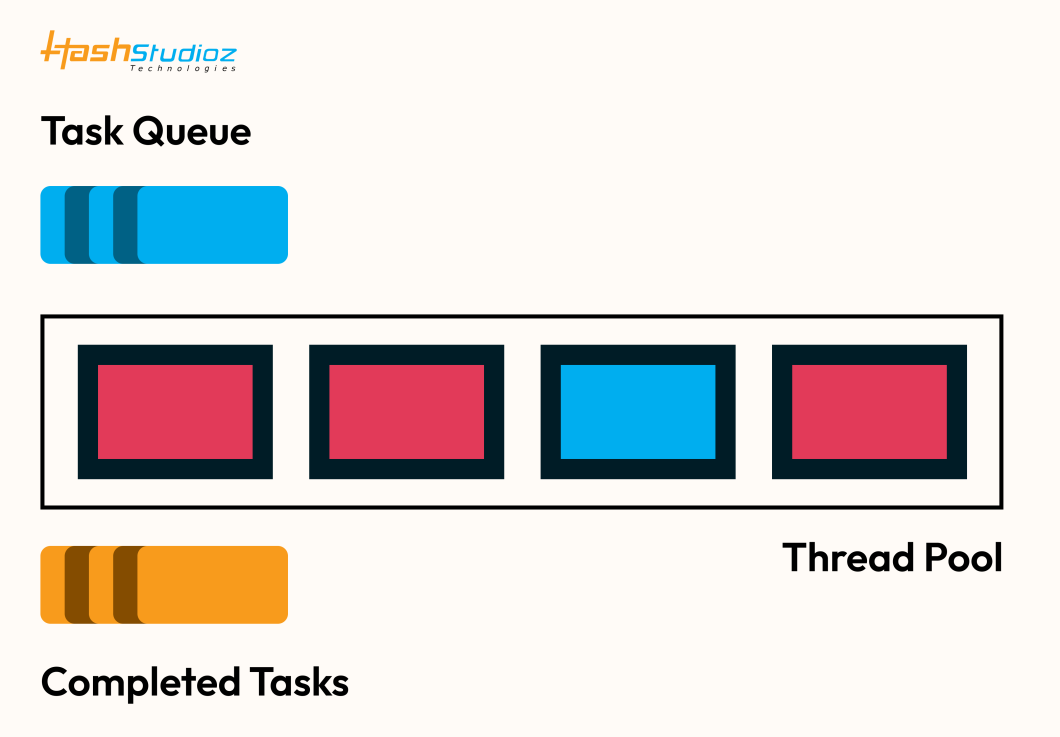

- It is commonly referred to simply as Dispatch.

- GCD is a queue-based API that executes closures on pools of worker threads in first-in, first-out (FIFO) order.

- We submit tasks to a queue, and GCD, which manages that queue, processes the submitted tasks in FIFO order.

- GCD, not the developer, decides which thread executes a task taken from a dispatch queue.

A dispatch queue executes tasks either serially or concurrently, but always starts them in FIFO order.

- An application can submit a task to a queue in the form of a block, either synchronously or asynchronously.
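A minimal sketch of the FIFO behaviour on a custom serial queue; the queue label is a hypothetical reverse-DNS name. Blocks are submitted with `async`, and a final `sync` call is used only to wait until they have all run.

```swift
import Foundation

// A custom serial queue; the label is a hypothetical reverse-DNS name.
let serialQueue = DispatchQueue(label: "com.example.fifo")
var executionOrder: [Int] = []  // only mutated on serialQueue

for task in 1...5 {
    serialQueue.async {
        executionOrder.append(task)  // runs on a GCD worker thread
    }
}

// sync returns only after every block submitted before it has finished.
serialQueue.sync {
    print(executionOrder) // [1, 2, 3, 4, 5]
}
```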

Synchronous vs Asynchronous

- Synchronous: blocks the current execution until the submitted task has completed.

- Asynchronous: the current task keeps executing while the new task runs asynchronously. Control returns from the method immediately, and other work can proceed.
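The following sketch records the order in which things happen around a `sync` call and an `async` call on the same queue (the label is hypothetical). The semaphore inside the async block exists only to make the demo's output order deterministic; it is not part of the sync/async pattern itself.

```swift
import Foundation

let queue = DispatchQueue(label: "com.example.work")  // hypothetical label
let eventsLock = NSLock()
var events: [String] = []

func record(_ event: String) {
    eventsLock.lock()
    events.append(event)
    eventsLock.unlock()
}

// Synchronous: the caller is blocked until the block has finished.
queue.sync { record("sync task") }
record("after sync")

// Asynchronous: the call returns immediately and the block runs later.
// The semaphore holds the block back until the caller has moved on,
// only so that the printed order is deterministic for this demo.
let callerMovedOn = DispatchSemaphore(value: 0)
queue.async {
    callerMovedOn.wait()
    record("async task")
}
record("after async")
callerMovedOn.signal()

queue.sync {}  // wait for the async block so the log is complete
print(events) // ["sync task", "after sync", "after async", "async task"]
```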

Serial Queue vs Concurrent Queue

- Serial: one task at a time.

- Concurrent: multiple tasks at a time.

- Even on a concurrent queue, tasks are dequeued serially, in a fixed FIFO order; once started, they can run at the same time and finish in any order.
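One way to observe the difference is to count how many tasks are running at the same moment. In this sketch, `peakConcurrency(on:count:)` is a hypothetical helper and the 50 ms sleep stands in for real work. A serial queue always reports a peak of 1; a concurrent queue usually reports more, depending on how many threads GCD makes available.

```swift
import Foundation

// Hypothetical helper: runs `count` short tasks on `queue` and returns the
// highest number of them that were executing at the same moment.
func peakConcurrency(on queue: DispatchQueue, count: Int = 6) -> Int {
    let lock = NSLock()
    var running = 0
    var peak = 0
    let group = DispatchGroup()

    for _ in 0..<count {
        queue.async(group: group) {
            lock.lock(); running += 1; peak = max(peak, running); lock.unlock()
            Thread.sleep(forTimeInterval: 0.05)  // simulated work
            lock.lock(); running -= 1; lock.unlock()
        }
    }
    group.wait()  // block until every task has finished
    return peak
}

let serial = DispatchQueue(label: "com.example.serial")
let concurrent = DispatchQueue(label: "com.example.concurrent", attributes: .concurrent)

print("serial peak:", peakConcurrency(on: serial))          // always 1
print("concurrent peak:", peakConcurrency(on: concurrent))  // usually > 1
```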

Serial/Concurrent vs Sync/Async

- Serial/Concurrent affects the destination queue to which you are dispatching.

- Sync/Async affects the current thread from which you are dispatching.

Types of Dispatch Queues

- Main Queue

- System provided Global Concurrent Queues

- Custom Queues

Main Queue:

- It is a system-created queue.

- Serial by nature.

- It uses the main thread. The main queue is the only queue bound to the main thread; other queues, whether system-provided or user-created, run their work on other threads. UIKit is also tied to the main thread, so UI work belongs on the main queue.

```swift
DispatchQueue.main.async {
    print(Thread.isMainThread ? "Execution on Main Thread" : "Execution on other threads")
}
```

Running the code above prints "Execution on Main Thread".

Global Concurrent Queue

- System-created queue.

- Concurrent by nature.

- Does not use the main thread.

- Priorities are decided through QoS (Quality of Service).

```swift
DispatchQueue.global().async {
    print(Thread.isMainThread ? "Execution on Main Thread" : "Execution on global concurrent queue")
}
```

Running the code above prints "Execution on global concurrent queue".
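QoS is chosen when requesting the global queue. A minimal sketch follows; the choice of `.userInitiated` and `.utility` is only illustrative, and QoS is a scheduling-priority hint rather than a guarantee of execution order. `group.wait()` is used here only so the demo finishes before exiting.

```swift
import Foundation

let group = DispatchGroup()
var userInitiatedOnMain = true  // written once by the background block below

// Request a global concurrent queue with a specific quality-of-service class.
DispatchQueue.global(qos: .userInitiated).async(group: group) {
    userInitiatedOnMain = Thread.isMainThread
    print("User-initiated work on main thread? \(userInitiatedOnMain)")
}

// Lower-priority work, such as prefetching, can use .utility or .background.
DispatchQueue.global(qos: .utility).async(group: group) {
    print("Utility work on main thread? \(Thread.isMainThread)")
}

group.wait()  // demo only: don't block the main thread like this in an app
```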

Dispatch Group

We often need to group multiple tasks together and receive a single callback once all of them have completed. For example, on a splash screen, we may want to make multiple API calls and only proceed to the home screen once all of them have finished. Similarly, if we're downloading multiple images, we want to proceed when all downloads are complete. Another case is fetching data from a database or API while showing a progress indicator, and only proceeding when both the progress animation and the data download have finished. In such scenarios, where two or more tasks must finish before an action can be performed, a dispatch group is the solution.
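When each task does its work synchronously inside its block, GCD can track group membership for you through `async(group:)`, without manual `enter()`/`leave()` calls. This is a minimal sketch with hypothetical file names and a sleep standing in for a blocking download. The manual `enter()`/`leave()` pairs in the `APIClient` example that follows are still needed there, because each fetch method returns before its completion handler runs, so a block-based group would consider the work finished too early.

```swift
import Foundation

let group = DispatchGroup()
let queue = DispatchQueue.global(qos: .userInitiated)
let resultsLock = NSLock()
var downloaded: [String] = []

// Hypothetical file names standing in for real downloads.
for file in ["avatar.png", "banner.png", "icon.png"] {
    // async(group:) enters the group on submit and leaves when the block returns.
    queue.async(group: group) {
        Thread.sleep(forTimeInterval: 0.1)  // stand-in for a blocking download
        resultsLock.lock()
        downloaded.append(file)
        resultsLock.unlock()
    }
}

// wait() blocks the calling thread, so in app code prefer notify(queue: .main).
group.wait()
print(downloaded.sorted()) // ["avatar.png", "banner.png", "icon.png"]
```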

```swift
import Foundation

class APIClient {

    func fetchUserDetails(completion: @escaping (String) -> Void) {
        // Simulate network delay
        DispatchQueue.global().async {
            sleep(1) // Simulating network call
            completion("User Details")
        }
    }

    func fetchUserPosts(completion: @escaping ([String]) -> Void) {
        // Simulate network delay
        DispatchQueue.global().async {
            sleep(1) // Simulating network call
            completion(["Post 1", "Post 2", "Post 3"])
        }
    }

    func fetchUserComments(completion: @escaping ([String]) -> Void) {
        // Simulate network delay
        DispatchQueue.global().async {
            sleep(1) // Simulating network call
            completion(["Comment 1", "Comment 2"])
        }
    }

    func fetchAllData(completion: @escaping (String, [String], [String]) -> Void) {
        let dispatchGroup = DispatchGroup()
        var userDetails: String = ""
        var userPosts: [String] = []
        var userComments: [String] = []

        // Each enter() is balanced by exactly one leave() in the completion handler
        dispatchGroup.enter()
        fetchUserDetails { details in
            userDetails = details
            dispatchGroup.leave()
        }

        dispatchGroup.enter()
        fetchUserPosts { posts in
            userPosts = posts
            dispatchGroup.leave()
        }

        dispatchGroup.enter()
        fetchUserComments { comments in
            userComments = comments
            dispatchGroup.leave()
        }

        // Notify when all API calls are complete
        dispatchGroup.notify(queue: .main) {
            completion(userDetails, userPosts, userComments)
        }
    }
}

// Usage
let apiClient = APIClient()
apiClient.fetchAllData { userDetails, userPosts, userComments in
    print("User Details: \(userDetails)")
    print("User Posts: \(userPosts)")
    print("User Comments: \(userComments)")
}
```

Dispatch WorkItem

- It is used to cancel tasks that have not started executing yet.

- It encapsulates a block of code.

- It can be submitted to a DispatchQueue and, optionally, associated with a DispatchGroup.

- It provides the flexibility to cancel a task, unless its execution has already started.

- cancel(): if it is called before execution starts, the task won't execute.

If the work item is cancelled during execution, isCancelled returns true, but execution is not aborted.
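A minimal sketch of cancelling a work item before it starts; the queue label and the "search request" are hypothetical. The item is scheduled with a delay, cancelled immediately, and never runs.

```swift
import Foundation

let queue = DispatchQueue(label: "com.example.search")  // hypothetical label
var didRun = false  // only touched on `queue`

// Wrap the work in a DispatchWorkItem so we keep a handle to cancel it.
let workItem = DispatchWorkItem {
    didRun = true
    print("Search request sent")
}

// Schedule the item for later, then cancel it before it has started.
queue.asyncAfter(deadline: .now() + 0.2, execute: workItem)
workItem.cancel()

// Let the deadline pass; the cancelled item is skipped rather than executed.
Thread.sleep(forTimeInterval: 0.4)
queue.sync {
    print("didRun: \(didRun), isCancelled: \(workItem.isCancelled)")
}
```

The same pattern is commonly used to debounce a search field: each keystroke cancels the previous work item and schedules a new one.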

Dispatch Barrier

A dispatch barrier is used with Grand Central Dispatch (GCD) to create a synchronization point for concurrent tasks. It lets you execute a block of code so that no other task on the same queue can access a shared resource while that block is running. This is particularly useful when you want to write to a resource while ensuring that no reads or other writes happen at the same time. Note that barriers only take effect on a concurrent queue you create yourself; on a serial queue or a global concurrent queue, a barrier block behaves like an ordinary block.
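A common way to package this is a reader-writer wrapper: reads use plain `sync` and may overlap each other, while writes use a barrier and run alone. `SynchronizedArray` below is a hypothetical type written for illustration, not a Foundation API.

```swift
import Foundation

// Hypothetical thread-safe array wrapper built on a private concurrent queue.
final class SynchronizedArray<Element> {
    private var storage: [Element] = []
    private let queue = DispatchQueue(label: "com.example.synchronized-array",
                                      attributes: .concurrent)

    func append(_ element: Element) {
        // Barrier: waits for in-flight reads to finish, then runs exclusively.
        queue.async(flags: .barrier) { self.storage.append(element) }
    }

    var snapshot: [Element] {
        // Plain sync read: may overlap other reads, never a barrier write.
        queue.sync { storage }
    }
}

let numbers = SynchronizedArray<Int>()
DispatchQueue.concurrentPerform(iterations: 100) { i in
    numbers.append(i)  // called from many threads at once
}
print(numbers.snapshot.count) // 100
```

Because the read in `snapshot` is submitted after all 100 barrier writes, it waits for every one of them before returning.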

```swift
import Foundation

let concurrentQueue = DispatchQueue(label: "com.example.queue", attributes: .concurrent)
var sharedResource = [Int]()

// Writing data using a barrier
concurrentQueue.async(flags: .barrier) {
    // This block will execute exclusively
    for i in 1...5 {
        sharedResource.append(i)
    }
    print("Data written: \(sharedResource)")
}

// Reading data
for _ in 1...3 {
    concurrentQueue.async {
        // These blocks can execute concurrently with each other,
        // but never at the same time as the barrier block
        print("Current resource: \(sharedResource)")
    }
}

// A synchronous barrier waits for every block submitted before it, so the
// final read is race-free and the program does not exit before the readers finish
concurrentQueue.sync(flags: .barrier) {
    print("Final resource state: \(sharedResource)")
}
```

Dispatch Semaphore

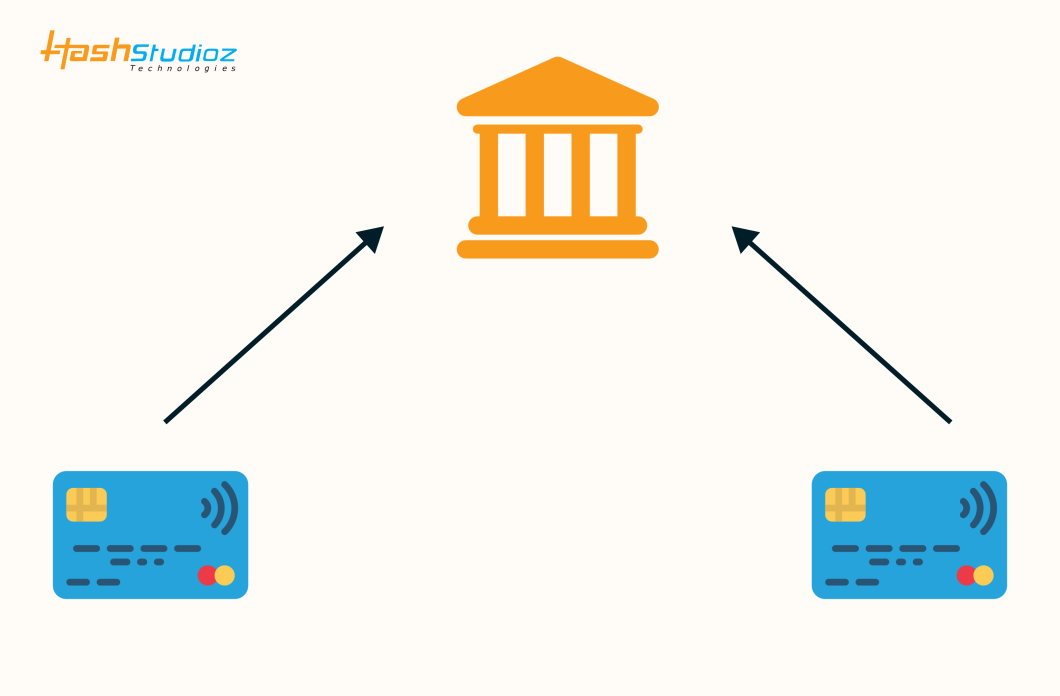

A dispatch semaphore is another effective way to deal with race conditions, data inconsistency, and controlled access to critical sections.

Example: In a bank, a joint account has multiple account holders, each with their own debit card, and all of these cards can withdraw money from the same account. If two account holders try to withdraw money from different machines at exactly the same time and the bank doesn't handle concurrent transactions properly, problems follow: both withdrawals may succeed and both holders may receive cash, even if the combined amount exceeds the balance. This problem can be solved using a dispatch barrier. So if dispatch barriers are available, what is the benefit of dispatch semaphores? Semaphores are more flexible and provide greater control and customization when dealing with race conditions.

```swift
import Foundation

class BankAccount {
    private var balance: Double
    private let semaphore: DispatchSemaphore

    init(initialBalance: Double) {
        self.balance = initialBalance
        self.semaphore = DispatchSemaphore(value: 1) // Only one access at a time
    }

    func deposit(amount: Double) {
        print("Depositing \(amount)...")
        semaphore.wait() // Wait for access
        // Simulate some processing time
        sleep(1)
        balance += amount
        print("Deposited \(amount). New balance: \(balance)")
        semaphore.signal() // Release access
    }

    func withdraw(amount: Double) {
        semaphore.wait() // Wait for access
        if amount <= balance {
            print("Withdrawing \(amount)...")
            // Simulate some processing time
            sleep(1)
            balance -= amount
            print("Withdrew \(amount). New balance: \(balance)")
        } else {
            print("Insufficient funds for withdrawal of \(amount). Current balance: \(balance)")
        }
        semaphore.signal() // Release access
    }
}

// Create a bank account with an initial balance
let jointAccount = BankAccount(initialBalance: 1000.0)

// Create a global dispatch queue
let dispatchQueue = DispatchQueue.global()

// Simulate multiple account holders accessing the account
let operations = [
    { jointAccount.deposit(amount: 200) },
    { jointAccount.withdraw(amount: 150) },
    { jointAccount.withdraw(amount: 900) },
    { jointAccount.deposit(amount: 50) },
    { jointAccount.withdraw(amount: 100) }
]

// Dispatch operations concurrently
for operation in operations {
    dispatchQueue.async(execute: operation)
}

// Allow some time for operations to complete before exiting the main thread
sleep(10)
```

Dispatch Semaphore Benefits

1. Flexible Resource Management

- Semaphores allow you to control access to a limited number of resources (e.g., connections, threads). You can specify how many threads can access the resource simultaneously.

- Barriers, on the other hand, are more rigid, allowing only one write operation at a time while permitting multiple read operations.

2. Granular Control

- With semaphores, you can fine-tune the level of concurrency based on your specific needs (e.g., if you want 3 threads to work simultaneously, you can easily set the semaphore count to 3).

- Barriers don’t provide this level of granularity; they either block for a write operation or allow concurrent reads.

3. Blocking and Signaling

- Semaphores provide a clear mechanism for waiting and signaling, allowing threads to explicitly wait for access to resources and to signal when they’re done.

- Barriers do not have this explicit signaling mechanism; they automatically manage access based on their defined behavior.

4. Versatility

- Semaphores can be used in a wider range of scenarios beyond just read/write operations, such as throttling, managing access to limited resources, or controlling the flow of tasks.

- Barriers are specifically designed for synchronizing reads and writes in concurrent collections, limiting their application scope.

5. Reduced Overhead

- In scenarios where you need multiple threads to access a resource concurrently, semaphores can reduce contention and waiting time, leading to potentially better performance.

- Barriers can introduce overhead due to their nature of blocking other operations until the barrier is cleared.
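The "flexible resource management" point can be sketched with a counting semaphore that lets at most three simulated downloads run at once. The limit of 3 and the 50 ms sleep are assumptions for illustration. Keep in mind that `wait()` blocks a GCD worker thread, so throttling a very large number of tasks this way can make GCD spawn many blocked threads.

```swift
import Foundation

let maxConcurrentDownloads = 3  // assumption: the limit to enforce
let semaphore = DispatchSemaphore(value: maxConcurrentDownloads)
let group = DispatchGroup()
let lock = NSLock()
var active = 0
var peak = 0

for _ in 1...10 {
    DispatchQueue.global().async(group: group) {
        semaphore.wait()              // take one of the available slots
        defer { semaphore.signal() }  // give the slot back when this block exits

        lock.lock(); active += 1; peak = max(peak, active); lock.unlock()
        Thread.sleep(forTimeInterval: 0.05)  // simulated download
        lock.lock(); active -= 1; lock.unlock()
    }
}

group.wait()
print("Peak simultaneous downloads: \(peak)")  // never more than 3
```

A barrier cannot express "at most three at a time"; the semaphore's initial count is what sets that limit.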

Key Differences Between Concurrency and Parallelism in iOS Development:

Concurrency and parallelism are crucial concepts in iOS development, especially when dealing with tasks that involve multitasking and performance optimization. Here’s a breakdown of the differences between the two:

| Concept | Concurrency | Parallelism |
| --- | --- | --- |
| Definition | Ability to manage multiple tasks at once. | Simultaneous execution of multiple tasks. |
| Core Usage | Can occur on a single-core processor through task switching. | Requires multiple cores for true simultaneous execution. |
| Execution | Tasks run independently, improving responsiveness. | Multiple threads run at the same time for better performance. |
| Programming | Implemented using asynchronous models (e.g., DispatchQueue). | Utilizes GCD and NSOperation for task distribution. |
| Architecture | Commonly used in event-driven architectures. | Focuses on compute-intensive tasks. |
| UI Impact | Ensures the UI remains responsive (e.g., background tasks). | Speeds up heavy data processing tasks (e.g., image processing). |
| Key Tools | DispatchQueue and OperationQueue for managing asynchronous tasks. | GCD and NSOperation for parallel execution. |

How HashStudioz Improves iOS App Performance Through Effective Use of Concurrency and Parallelism

At HashStudioz, we understand that modern iOS applications must deliver exceptional performance and responsiveness. By effectively leveraging concurrency and parallelism, we help developers optimize their apps to meet user expectations. Here’s how we do it:

1. Thorough Performance Audits

We begin by conducting comprehensive performance audits of your existing iOS applications. Our team identifies bottlenecks and inefficiencies, assessing how well your app utilizes concurrency and parallelism.

2. Customized Optimization Strategies

Based on our findings, we develop tailored optimization strategies designed to improve app performance. This includes recommending best practices for implementing concurrency and parallelism, ensuring that tasks run efficiently without blocking the main thread.

3. Hands-On Training and Workshops

Our workshops and training sessions empower developers with the knowledge and skills to implement concurrency and parallelism effectively. We cover topics like Grand Central Dispatch (GCD), NSOperation, and asynchronous programming, providing practical insights and hands-on experience.

4. Code Samples and Best Practices

We provide access to a library of code samples and reusable templates, illustrating best practices for integrating concurrency and parallelism into your projects. These resources help developers quickly implement solutions that enhance app performance.

5. Expert Consultation

Our team of experts is available for one-on-one consultations, offering personalized guidance on addressing specific performance challenges. We work closely with you to ensure your app utilizes concurrency and parallelism to its fullest potential.

6. Community Engagement

We foster a community of developers who share experiences and solutions related to app performance. Our forums and Q&A sessions provide ongoing support, allowing developers to learn from one another and stay updated on best practices.

7. Continuous Learning and Updates

We stay at the forefront of iOS development trends. We regularly update our resources and training materials to reflect the latest advancements in concurrency and parallelism, ensuring our clients are always informed.

At HashStudioz, we don’t just focus on optimizing performance; we’re passionate about delivering excellence in every aspect of iOS app development. Our diverse range of services is crafted to empower developers at every stage of the app development journey, ensuring you have the support you need to bring your vision to life.

Your feedback and questions are important to us! If you have any queries or concerns, feel free to reach out.